Working on my first multi-agent project has been nothing short of eye-opening. AI systems are here to stay, especially now that advanced LLMs are taking center stage and reshaping what’s possible. To keep these systems efficient and flexible, it helps to split one large agent-based setup into a collection of more specialized units rather than relying on a single, one-size-fits-all solution.

In LangChain’s words, an agent is a system that uses an LLM to decide the control flow of an application. So, when should an agent be used? Agents suit open-ended problems where it’s difficult or impossible to predict the required number of steps and where you can’t hardcode a fixed path.

Agents Go Agentic

- Empowering Agents with Agency

Generative AI models can be taught to use external tools to fetch real-time information or perform tasks, such as checking a database for past purchases or sending an email. Once these AI systems can plan and take actions on their own to reach a goal, they become agentic.

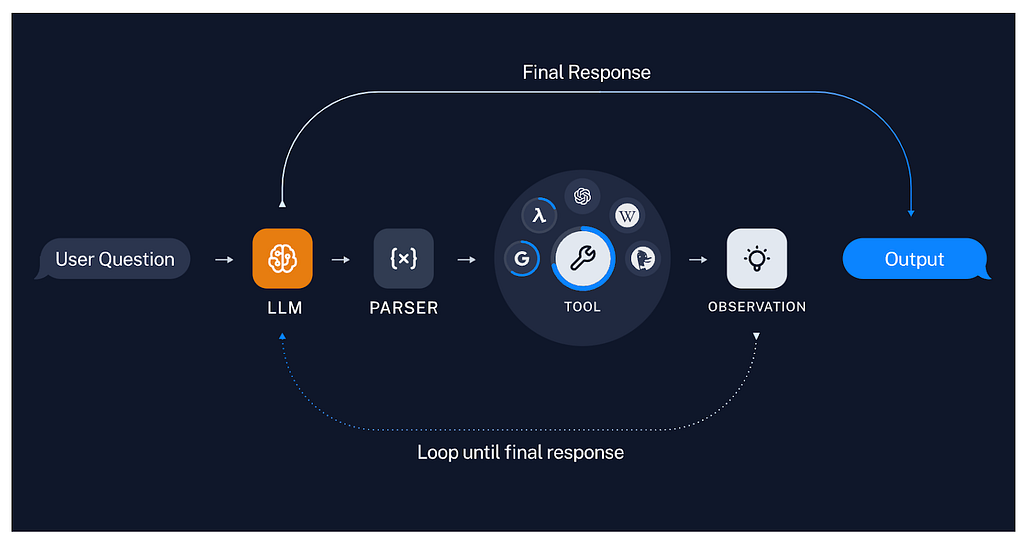

Research led by Shunyu Yao shows that agents often perform better when they alternate between their own internal reasoning and external actions. Their ReAct framework demonstrates how a model can move between “thinking” and performing practical steps (like making an API call), using the result of each action to inform its next thought. This ability to plan and execute tasks in a self-directed way transforms a basic language model into what we now call an “agent.” An agent, therefore, is not only capable of reasoning and processing input but also of interacting with external tools, making decisions autonomously, and iterating until it achieves its goal.
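To make the thought/action alternation concrete, here is a minimal ReAct-style loop in plain Python. It is a sketch and not the paper’s implementation. The `Thought:` / `Action:` / `Observation:` text format loosely follows the examples in the ReAct paper. `react`, `TOOLS`, the `lookup` tool, and `scripted_llm` (a stand-in for a real model) are hypothetical names chosen for illustration.

```python
import re
from typing import Callable

# Hypothetical tool registry; a real agent would call external APIs here.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda query: {"capital of France": "Paris"}.get(query, "unknown"),
}

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")  # e.g. "Action: lookup[capital of France]"
FINISH_RE = re.compile(r"Finish\[(.*)\]")           # e.g. "Action: Finish[Paris]"


def react(llm: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    """Alternate model reasoning ("Thought") with tool calls ("Action") until the model finishes."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)                 # the model writes a Thought and an Action
        transcript += step + "\n"
        finished = FINISH_RE.search(step)
        if finished:
            return finished.group(1)
        action = ACTION_RE.search(step)
        if action is None:
            raise ValueError(f"could not parse step: {step!r}")
        tool_name, tool_input = action.groups()
        observation = TOOLS[tool_name](tool_input)     # act on the environment
        transcript += f"Observation: {observation}\n"  # feed the result into the next thought
    raise RuntimeError("no answer within max_steps")


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: looks something up first, then answers."""
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAction: lookup[capital of France]"
    return "Thought: The observation answers the question.\nAction: Finish[Paris]"


print(react(scripted_llm, "What is the capital of France?"))  # Paris
```

The `max_steps` cap is a deliberate design choice. Because the model controls the loop, something outside the model has to bound how long it can keep acting.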

Beyond the Monolith: Multi-Agent Conversational Systems

- Why and When Is a Multi-Agent Approach Beneficial?

The case for a multi-agent system becomes intuitive in complex problems that demand collaboration and parallel coordination. At its core, the idea is simple: rather than relying on one giant, monolithic LLM call, we distribute tasks among specialized “agents.”

In a multi-agent architecture, agents are best described as “team members,” each powered by an LLM, a specific prompt, tools, and internal logic. Every agent handles its own role, which makes the overall system more efficient and helps things move faster. They all work inside a shared framework that orchestrates the flow of the conversation.

Within the LangChain ecosystem, LangGraph offers a modular framework designed to make multi-agent workflows easier to manage. It represents a workflow as a graph: nodes are agents or functions, edges define which node runs next, and a shared state carries information between them. This structure supports effective coordination among AI agents and keeps their collaboration well organized.

The ecosystem also provides chains: sequences of calls, whether to an LLM, a tool, or a data preprocessing step, linked together. Once set up, agents and their chains can be wrapped in an API layer or another deployment solution to make them accessible to other applications.

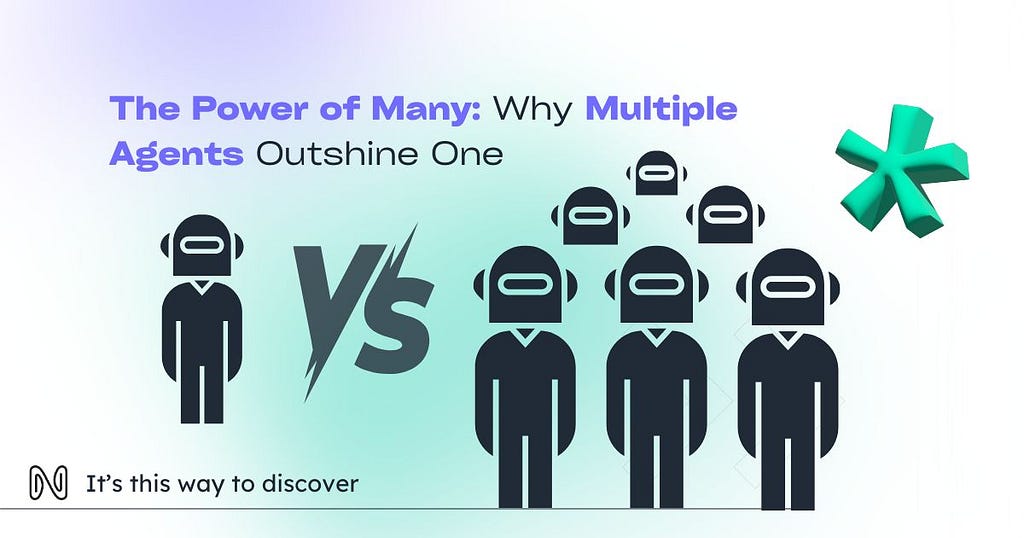

The figure compares four levels of LLM autonomy in cognitive architectures.

- LLM Call: In this setup, the code hands a single query to the language model and receives a one-off answer. The LLM determines only the content of its response, while the code retains control over every other part of the flow.

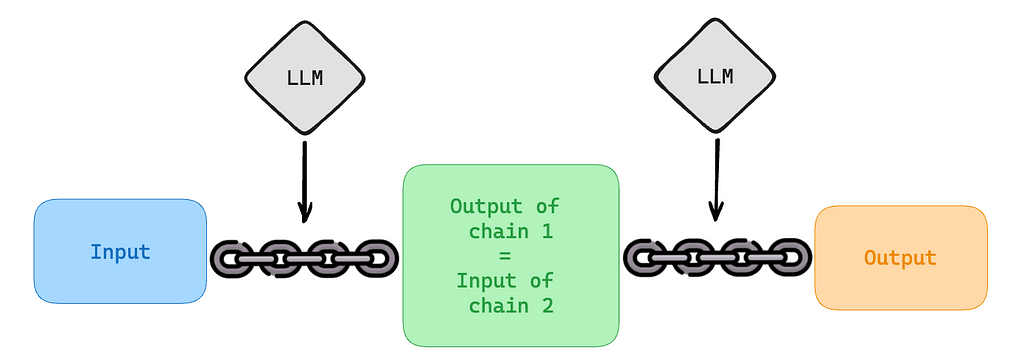

- Chain: A chain links multiple LLM calls together. The model still generates each response, but the sequence of steps is dictated by the code. It allows for more complex interactions than a single call, yet follows a predetermined structure.

- Router: Here, the language model decides which branch of logic to follow at each stage, but the possible paths themselves are laid out by the code. This approach balances flexibility with a defined set of routes.

- Agent: An agent has the most autonomy. It chooses how to respond, which step to take next, and even which tools to use. There is no fixed sequence of steps, so the LLM can adapt freely and steer the entire process on its own.
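The four levels can be contrasted in a few lines of plain Python. This is a hypothetical sketch. `LLM` is any prompt-in, text-out callable (here a stub called `fake_llm`), and the function, route, and tool names are illustrative. None of them are part of LangChain or LangGraph. The only thing that changes from one function to the next is who decides the control flow.

```python
from typing import Callable

LLM = Callable[[str], str]  # any model: prompt in, text out


def llm_call(llm: LLM, query: str) -> str:
    # Level 1: the model only decides the content of a single answer.
    return llm(query)


def chain(llm: LLM, query: str) -> str:
    # Level 2: the code fixes the sequence of calls; the model fills in each step.
    outline = llm(f"Outline: {query}")
    return llm(f"Expand: {outline}")


def router(llm: LLM, query: str, routes: dict[str, Callable[[str], str]]) -> str:
    # Level 3: the model picks a branch, but only among routes the code defines.
    choice = llm(f"Pick one of {sorted(routes)} for: {query}").strip()
    return routes.get(choice, routes["default"])(query)


def agent(llm: LLM, query: str, tools: dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    # Level 4: the model chooses the next tool at every step and decides when it is done.
    context = query
    for _ in range(max_steps):
        decision = llm(f"Next step for: {context}").strip()
        if decision == "done":
            break
        context = tools[decision](context)
    return context


def fake_llm(prompt: str) -> str:
    """Stub model so the example runs without an API key."""
    if prompt.startswith("Pick"):
        return "math"
    if prompt.startswith("Next step"):
        return "done" if "[checked]" in prompt else "check"
    return prompt.upper()


routes = {"math": lambda q: f"math:{q}", "default": lambda q: f"default:{q}"}
tools = {"check": lambda c: c + " [checked]"}
print(llm_call(fake_llm, "hi"))         # HI
print(chain(fake_llm, "hi"))            # EXPAND: OUTLINE: HI
print(router(fake_llm, "2+2", routes))  # math:2+2
print(agent(fake_llm, "q", tools))      # q [checked]
```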

Unpacking the Core Components

- Technical Building Blocks

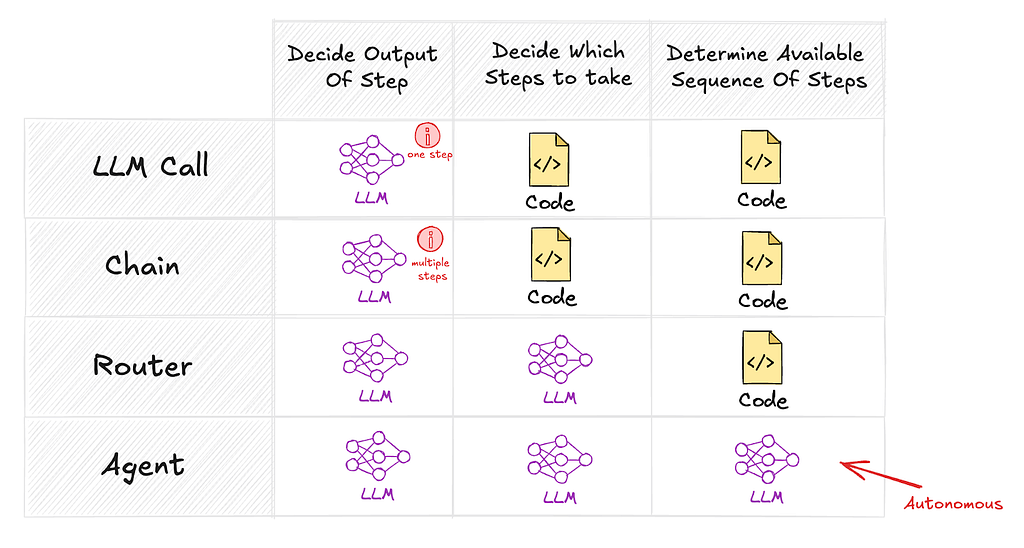

Many in the research community have built AI agents atop LLMs, marking a significant step forward. Different teams use varying terminology, such as “Brain, Perception, and Action” (as in The Rise and Potential of Large Language Model Based Agents: A Survey) or “Tool, Reasoning, and Execution,” but the underlying roles remain consistent: processing input, making decisions, and taking action.

For example, imagine a virtual agent designed to help you book a train ticket. In this scenario, a user interacts with a conversational AI that handles queries about travel dates and departure and arrival cities, checks train availability, performs the booking, and finally emails the ticket. This example highlights how agentic systems can integrate complex decision-making into everyday tasks by breaking the process down into specialized steps managed by dedicated agents.

The “Brain” corresponds to the LLM itself, which handles reasoning and decision-making. “Perception” broadens the agent’s input beyond text, allowing it to gather multimodal information from its environment. Finally, “Action” involves responding or using external tools. Although this framework might evoke the idea of physical mobility, it applies just as well to conversational agents whose “Action” is purely digital.

With the fundamentals of tools, reasoning, and action (the trifecta that drives decision-making in these systems) in place, I found it essential to dive deeper into the mechanisms that connect these parts: chains and tools.

Chains:

- The Seamless Sequences

I was genuinely surprised at how elegantly chains can encapsulate complex workflows. In a multi-agent system, chains are essentially sequences of processing steps. They can run sequentially, with each output feeding into the next step, or in parallel, using map/reduce techniques to handle large datasets. There’s also the router chain, which dynamically directs inputs to the appropriate sub-chain based on context. For me, the counterintuitive aspect was that while the LLM does the heavy lifting in terms of reasoning, the real magic happens in the chaining. It’s the glue that turns isolated LLM calls into a coherent, adaptable process.
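Here is a framework-free sketch of the sequential and map/reduce shapes. It assumes each step is simply a function from text to text. In a real system some steps would be LLM calls; for example, the map step might summarize each chunk and the reduce step might merge the summaries. `sequential` and `map_reduce` are hypothetical helpers, not LangChain classes. The parallel map uses Python’s standard `ThreadPoolExecutor`.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import Callable

Step = Callable[[str], str]  # one processing step: text in, text out


def sequential(*steps: Step) -> Step:
    """Sequential chain: each step's output becomes the next step's input."""
    def run(text: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, text)
    return run


def map_reduce(map_step: Step, reduce_step: Callable[[list[str]], str]) -> Callable[[list[str]], str]:
    """Map/reduce chain: process chunks in parallel, then combine the partial results."""
    def run(chunks: list[str]) -> str:
        with ThreadPoolExecutor() as pool:
            partials = list(pool.map(map_step, chunks))  # results come back in chunk order
        return reduce_step(partials)
    return run


# Usage: plain string functions stand in for LLM calls.
normalize = sequential(str.strip, str.lower, lambda s: " ".join(s.split()))
print(normalize("  Hello   World "))  # hello world

count_words = map_reduce(lambda chunk: str(len(chunk.split())),
                         lambda parts: str(sum(int(p) for p in parts)))
print(count_words(["a b c", "d e", "f"]))  # 6
```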

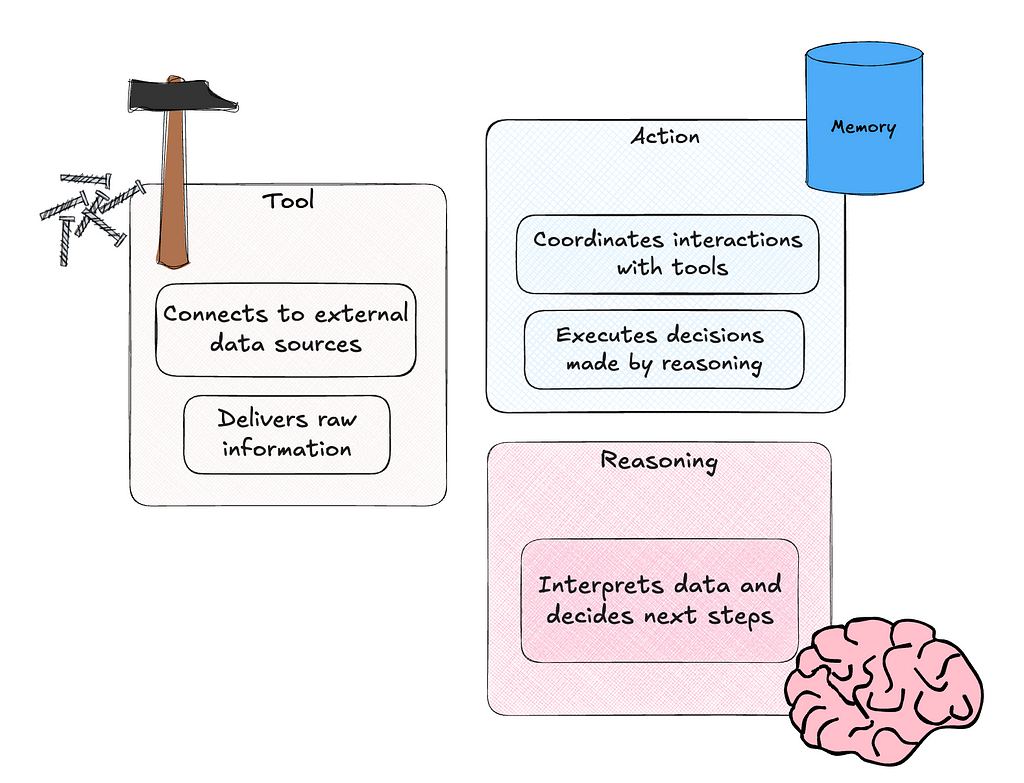

Tools:

- Extending the Agent’s Reach

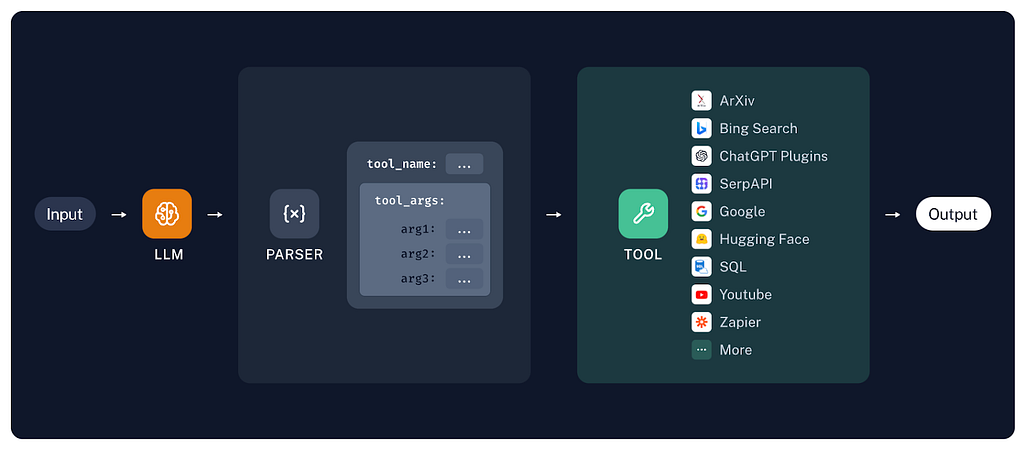

Once an agent decides on an action, it doesn’t carry it out on its own. This is where tools come into play. Tools are the actual mechanisms, such as APIs, data preprocessing functions, or time-awareness utilities, that allow an agent to interact with its environment. I found it fascinating (and a bit counterintuitive) that the true power of these systems often lies not in the abstract reasoning of the LLM but in the practical, tangible capabilities of these tools. They bridge the gap between digital thought and real-world action, making the system more robust.
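A minimal sketch of how tools can be exposed to an agent: functions are registered by name, and the model’s requested call is dispatched to them. The JSON shape `{"name": ..., "args": {...}}` is an assumption made for this example; real tool-calling APIs each define their own format. `search_trains` is a hypothetical stand-in for a train-schedule API.

```python
import json
from datetime import datetime, timezone
from typing import Callable

TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def current_time() -> str:
    # Time awareness: the model itself has no clock, so it asks a tool.
    return datetime.now(timezone.utc).isoformat()


@tool
def search_trains(origin: str, destination: str) -> str:
    # Hypothetical stand-in for a train-schedule API; returns canned data.
    return json.dumps([{"from": origin, "to": destination, "departs": "09:15"}])


def dispatch(tool_call: str) -> str:
    """Execute a model-proposed call such as {"name": ..., "args": {...}}."""
    call = json.loads(tool_call)
    fn = TOOLS.get(call["name"])
    if fn is None:
        return f"error: unknown tool {call['name']!r}"  # returned to the model, not raised
    return fn(**call.get("args", {}))


print(dispatch('{"name": "search_trains", "args": {"origin": "Paris", "destination": "Lyon"}}'))
# [{"from": "Paris", "to": "Lyon", "departs": "09:15"}]
```

For an unknown tool, the sketch returns an error string rather than raising. That way the agent can see the mistake in its next observation and choose a different action.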

There are two main approaches to using tools:

- Chains: Let you define a fixed sequence of tool calls.

In our train booking scenario, the chain might start with an API call to a train schedule database that retrieves available journeys, followed by another API call to a booking service to reserve the seat, and finally a call to an email service API to dispatch the train ticket to the user.

- Agents: Let the model call tools in a loop, deciding dynamically which tool to use and how many times to call it.

For example, the train booking agent might repeatedly query different train schedule APIs to find the best match or check seat availability, and then dynamically select the most appropriate booking and notification services based on real-time data.
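The two approaches can be sketched side by side for the train booking scenario. Everything here is hypothetical: the two schedule providers, `book`, and `send_email` are stubs for real services. `choose` plays the role of the LLM by deciding which API to query next or when to stop. The chain always runs the same three steps. The agent keeps querying until it has found a train with free seats.

```python
from typing import Callable, Optional

# Hypothetical stand-ins for two schedule providers plus booking and email services.
SCHEDULE_APIS: dict[str, Callable[[str], list[dict]]] = {
    "provider_a": lambda date: [{"train": "A1", "date": date, "seats": 0}],
    "provider_b": lambda date: [{"train": "B7", "date": date, "seats": 12}],
}


def book(train: dict) -> str:
    return f"booking-{train['train']}"


def send_email(booking_id: str) -> str:
    return f"emailed {booking_id}"


def booking_chain(date: str) -> str:
    """Chain: search -> book -> email, in a fixed order decided by the code.
    It cannot react to what the search returned (here, a sold-out train)."""
    train = SCHEDULE_APIS["provider_a"](date)[0]
    return send_email(book(train))


def booking_agent(date: str, choose: Callable[[list[str], list[dict]], Optional[str]]) -> str:
    """Agent: `choose` (the LLM's role) picks the next API to query, or None to stop."""
    seen: list[dict] = []
    remaining = list(SCHEDULE_APIS)
    while remaining:
        api = choose(remaining, seen)
        if api is None:
            break
        remaining.remove(api)
        seen.extend(SCHEDULE_APIS[api](date))
    available = [t for t in seen if t["seats"] > 0]
    return send_email(book(available[0])) if available else "no seats found"


def stub_choose(remaining: list[str], seen: list[dict]) -> Optional[str]:
    # Stand-in for the model: keep querying until some train has free seats.
    return None if any(t["seats"] > 0 for t in seen) else remaining[0]


print(booking_chain("2025-05-01"))               # emailed booking-A1
print(booking_agent("2025-05-01", stub_choose))  # emailed booking-B7
```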

Agent Architectures:

- Designing for Use Cases

Choosing the right agent architecture is critical to matching the system’s needs. As complexity grows, so do the challenges: a single agent may have too many tools to choose from, its context can grow too large, and the work may call for specialized roles (e.g., planner, researcher, math expert).

Why Agent Architectures Matter:

- Modularity: Independent agents simplify development, testing, and maintenance.

- Specialization: Tailor agents to focus on specific domains, improving overall performance.

- Control: Explicitly design communication between agents rather than relying solely on ad hoc function calls.

Some Common Multi-Agent Architectures:

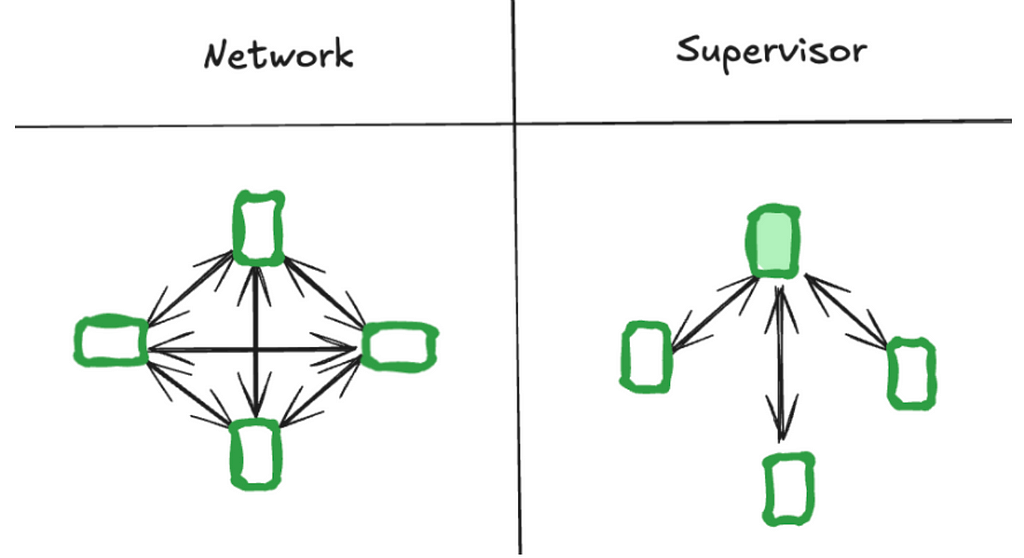

Network Architecture:

- Concept: Each agent can communicate with every other agent.

- Use Case: Problems with no clear hierarchy.
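In a network, routing is distributed: each agent decides who speaks next, and any agent may name any other. The sketch below assumes stub agents that return a `(message, next_agent)` pair. In practice each agent would be an LLM with its own prompt and tools. The agent names and `run_network` are hypothetical.

```python
from typing import Callable, Optional

Reply = tuple[str, Optional[str]]   # (message, name of the next agent, or None to stop)
Agent = Callable[[list[str]], Reply]


def researcher(history: list[str]) -> Reply:
    return "found 3 sources", "writer"


def writer(history: list[str]) -> Reply:
    # Any agent may hand off to any other; the writer requests one fact check.
    if not any("checked" in message for message in history):
        return "draft ready", "fact_checker"
    return "final draft", None


def fact_checker(history: list[str]) -> Reply:
    return "claims checked", "writer"


AGENTS: dict[str, Agent] = {
    "researcher": researcher,
    "writer": writer,
    "fact_checker": fact_checker,
}


def run_network(start: str, max_turns: int = 10) -> list[str]:
    """Let agents pass control among themselves until one returns None as the next agent."""
    history: list[str] = []
    current: Optional[str] = start
    for _ in range(max_turns):  # guard against agents handing off to each other forever
        if current is None:
            break
        name = current
        message, current = AGENTS[name](history)
        history.append(f"{name}: {message}")
    return history


for line in run_network("researcher"):
    print(line)
# researcher: found 3 sources
# writer: draft ready
# fact_checker: claims checked
# writer: final draft
```

Without a central coordinator, nothing stops two agents from handing off to each other indefinitely. That risk is why the sketch caps the number of turns.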

Supervisor Architecture:

- Concept: A single supervisor node directs which agent should act next.

- Use Case: When a central coordinator should decide which specialist acts next, keeping complex interactions under control.
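By contrast, in a supervisor architecture every turn passes through one node that picks the next worker. In this hypothetical sketch, `supervisor` is a rule-based stand-in for what would normally be an LLM call. It plans first, researches next, and brings in the math expert only when the task contains numbers. The worker names mirror the roles mentioned earlier and are illustrative only.

```python
from typing import Callable

WORKERS: dict[str, Callable[[str], str]] = {
    "planner": lambda task: f"plan for {task}",
    "researcher": lambda task: f"facts about {task}",
    "math_expert": lambda task: f"calculations for {task}",
}


def supervisor(task: str, done: list[str]) -> str:
    """Stand-in for an LLM supervisor: returns the next worker's name or 'FINISH'."""
    if "planner" not in done:
        return "planner"
    if "researcher" not in done:
        return "researcher"
    if "math_expert" not in done and any(ch.isdigit() for ch in task):
        return "math_expert"  # only involve the math expert when the task has numbers
    return "FINISH"


def run_supervised(task: str, max_turns: int = 10) -> dict[str, str]:
    """Workers never talk to each other; every turn goes back through the supervisor."""
    results: dict[str, str] = {}
    for _ in range(max_turns):
        choice = supervisor(task, list(results))
        if choice == "FINISH":
            break
        results[choice] = WORKERS[choice](task)
    return results


print(list(run_supervised("compare 2 routes")))   # ['planner', 'researcher', 'math_expert']
print(list(run_supervised("summarize reviews")))  # ['planner', 'researcher']
```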

Additional Considerations from Anthropic:

Agents vs. Workflows:

- Workflows: Predefined paths that offer consistency and predictability.

- Agents: Dynamic systems where the LLM directs its own process, ideal for open-ended tasks.

When to Use Agents?

- For tasks with unpredictable steps.

- When dynamic tool usage and flexible decision-making are needed.

- Where continuous feedback from the environment (or human oversight) is critical.

Conclusion: Embrace the Future of Modular AI

As I reflect on this journey, one of the most profound insights I gained is how our roles as ML engineers are evolving. In my early academic days, the focus was on model fitting, model selection, and managing data pipelines. However, diving into the world of LLMs and multi-agent architectures has reshaped my perspective entirely. Today, it’s more about crafting a flexible, modular ecosystem where every component can grow, adapt, and contribute to a shared intelligence.

As you embark on your own adventures in this dynamic field, I invite you to see modularity as more than just a technical strategy. As I see it, the future of AI is rooted in collaboration: the seamless interplay of many elements working in harmony. This was a lesson I learned during my very first multi-agent project, and it continues to inspire me every day.